With this new video, the past months of my "Calculus for Machine Learning" videos all start to come together, enabling us to apply the simplest ML model: a regression line fit to individual data points one by one by one.
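
Below is a rough sketch of fitting a regression line to data points one at a time. After each individual point, the Python nudges a slope m and an intercept b along the gradient of that point's squared error. The function name fit_line_sgd, the learning rate, the epoch count, and the toy data are hypothetical choices for illustration. This is not the code from the video.

```python
import random


def fit_line_sgd(xs, ys, lr=0.01, epochs=500, seed=0):
    """Fit y ~ m*x + b, updating m and b after every single data point (hypothetical helper)."""
    rng = random.Random(seed)
    m, b = 0.0, 0.0                      # start from a flat line through the origin
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)               # visit the points in a fresh random order each pass
        for i in order:
            y_hat = m * xs[i] + b        # prediction for this one point
            error = y_hat - ys[i]
            # Gradient of the squared error (y_hat - y)^2 for this point:
            #   dC/dm = 2 * error * x,  dC/db = 2 * error
            m -= lr * 2 * error * xs[i]
            b -= lr * 2 * error
    return m, b


# Toy data lying exactly on y = 2x + 1 (hypothetical example values)
m, b = fit_line_sgd([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
print(f"m = {m:.3f}, b = {b:.3f}")
```

The toy points are noiseless, so the estimates should end up very close to m = 2 and b = 1. Real data would leave some residual error.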

This simple regression model will enable us, in next week's video, to derive the simplest-possible partial derivatives for calculating a machine learning gradient.
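
To give a flavor of those partial derivatives, here is one standard derivation. It assumes a line $\hat{y} = mx + b$ and a squared-error cost on a single data point $(x, y)$. Both are illustrative assumptions rather than details taken from the video.

$$
C = (\hat{y} - y)^2 = (mx + b - y)^2
$$

$$
\frac{\partial C}{\partial m} = 2(\hat{y} - y)\,x, \qquad \frac{\partial C}{\partial b} = 2(\hat{y} - y)
$$

Each result is the chain rule at work. The outer derivative $2(\hat{y} - y)$ is multiplied by the inner derivative of $mx + b$, which is $x$ with respect to $m$ and $1$ with respect to $b$.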

We publish a new video from my "Calculus for Machine Learning" course to YouTube every Wednesday. Playlist is here.

More detail about my broader "ML Foundations" curriculum and all of the associated open-source code is available in GitHub here.

Skyrocket Your Career by Sharing Your Writing

This week's guest is Khuyen Tran, one of the preeminent voices of data science: Her blog alone receives over 100k views/month. In this episode, she details how publishing your writing can skyrocket your career, too.

Khuyen:

• Became an author for the Towards Data Science blog less than two years ago; already her articles garner 100k+ views per month

• Writes practical daily posts featuring Python code right here on LinkedIn, which earned her a highly engaged following of over 25k in just one year!

• Recently became a technical writer for NVIDIA's Developer Blog

• Has landed four data science jobs, including her current role at Ocelot Consulting, in part thanks to her writing

• Has a perfect 4.0 GPA in the computational and applied mathematics undergraduate degree that she's on track to complete next year

In today's episode, Khuyen fills us in on:

• How publishing your writing can skyrocket your technical career

• Her tricks for maximizing engagement with the content you publish

• Her favorite data science tools and approaches

• Her tricks for prioritizing and being as epically productive as she is across her studies, her data science work, and her prodigious technical writing

And thanks to Krzysztof and Nikolay for the outstanding audience questions that Khuyen addressed on-air.

The episode's available on all major podcasting platforms, YouTube, and at SuperDataScience.com.

The Highest-Paying Programming Languages for Data Scientists

This article was adapted from a podcast episode, which you can check out here.

The beloved technical publisher O’Reilly recently released its 2021 Data/AI Salary Survey. It covers responses from 3,000 respondents in the US plus another 300 from the UK. All of the respondents are subscribers to O’Reilly’s Data & AI Email Newsletter.

There’s quite a lot of detail in the report on how the salaries of data professionals vary, including by gender, level of education, career stage, industry, and geographic location. Today, I’m focusing on how salaries vary by programming language since this is an attribute that you can easily change about yourself, simply by learning something new.

Exercises on the Multivariate Chain Rule

Last week's YouTube video detailed how we use the Chain Rule for Multivariate (Partial Derivative) Calculus. This week's video features three exercises to test your comprehension of the topic.
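
A quick way to check an answer to a chain-rule exercise is to compare the chain-rule result against a numerical finite-difference estimate. The functions below use f(u, v) = u²v with u = 3t and v = t². They are a hypothetical example and are not one of the video's three exercises.

```python
def f(u, v):
    """Outer function y = f(u, v) = u^2 * v (hypothetical example)."""
    return u**2 * v


def dy_dt_chain_rule(t):
    """dy/dt via the multivariate chain rule, with u = 3t and v = t^2."""
    u, v = 3 * t, t**2
    df_du = 2 * u * v      # partial derivative of u^2 * v with respect to u
    df_dv = u**2           # partial derivative of u^2 * v with respect to v
    du_dt = 3
    dv_dt = 2 * t
    # Sum the contribution flowing through each intermediate variable
    return df_du * du_dt + df_dv * dv_dt


def dy_dt_numeric(t, h=1e-6):
    """Central finite-difference estimate of dy/dt, for comparison."""
    y = lambda s: f(3 * s, s**2)
    return (y(t + h) - y(t - h)) / (2 * h)


print(dy_dt_chain_rule(2), dy_dt_numeric(2.0))
```

Substituting directly gives y = 9t⁴, so dy/dt = 36t³, which is 288 at t = 2. Both functions should agree with that value up to floating-point error.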

A.I. for Good

This week's guest is the eloquent and inspiring James Hodson, founder and CEO of the AI for Good Foundation, which leverages data and machine learning to tackle the United Nations' Sustainable Development Goals.

In this episode, James details:

• Globally impactful case studies from his A.I. for Good organization across public health, DEI, and a practical database of A.I. progress on social issues

• How you yourself can get involved in helping apply A.I. for wide-reaching social benefit, whether you're a technical expert or not

• The hard and soft skills that he looks for in the data scientists that he hires

In addition to his leadership of A.I. for Good, James:

• Is an academic research fellow at the Jozef Stefan Institute, where he's focused on Natural Language Processing research

• Is Chief Science Officer at Cognism, a British tech startup

• Served as A.I. Research Manager at Bloomberg LP

• Completed a degree at Princeton University focused on Machine Translation

Thank you to Claudia Perlich for the intro to James! I learned a ton from him while filming this episode.

The episode's available on all major podcasting platforms, on YouTube, and at SuperDataScience.com.

Intro to Deep Reinforcement Learning at Columbia University

My goodness, did I ever miss lecturing in person! I was finally back in front of a live classroom on Friday, providing an intro to Deep Reinforcement Learning to engineering graduates at Columbia University in the City of New York.

Thank you Chong and Sam for having me. It's always a delight to lecture to your brilliant ELEN E6885 students, especially now that the pandemic is subsiding and I can interact with them meaningfully again.

You can see the slides here, as well as the associated GitHub repository here.

Fail More

Fail more! Failing is very, very good. For Five-Minute Friday this week, I elaborate on why.

SuperDataScience episodes are available on all major podcasting platforms, YouTube, and at SuperDataScience.com.

The Chain Rule for Partial Derivatives

For this week's YouTube video, we apply the Chain Rule (introduced earlier in the series in the single-variable case) to find the partial derivatives of multivariate functions — such as Machine Learning functions!
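
For reference, here is one common way to write the multivariate chain rule. Assume $y = f(u, v)$, where $u$ and $v$ are themselves functions of $x$ and $z$. These variable names are illustrative and are not taken from the video. Each partial derivative of $y$ sums the contributions flowing through every intermediate variable:

$$
\frac{\partial y}{\partial x} = \frac{\partial y}{\partial u}\frac{\partial u}{\partial x} + \frac{\partial y}{\partial v}\frac{\partial v}{\partial x},
\qquad
\frac{\partial y}{\partial z} = \frac{\partial y}{\partial u}\frac{\partial u}{\partial z} + \frac{\partial y}{\partial v}\frac{\partial v}{\partial z}
$$

For instance, with $y = uv$, $u = x + z$, and $v = xz$:

$$
\frac{\partial y}{\partial x} = v \cdot 1 + u \cdot z = xz + (x + z)z = 2xz + z^2
$$

This matches differentiating $y = x^2 z + x z^2$ directly.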

Does Caffeine Hurt Productivity? (Part 3: Scientific Literature)

For Five-Minute Friday yesterworkday, I concluded my three-part series on whether caffeine disrupts productivity by broadening from the experiment I ran on myself to the academic literature on the topic.

My Jupyter notebook of data and analysis of the caffeine vs productivity experiment I ran on myself is here.

SuperDataScience episodes are available on all major podcasting platforms, YouTube, and SuperDataScience.com.

Partial Derivative Notation

This week's YouTube video is a quick one on the common options for partial derivative notation.
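
As a general summary, assume a function $z = f(x, y)$. All of the following are widely used ways of writing its partial derivative with respect to $x$. The list is not necessarily the exact set covered in the video.

$$
\frac{\partial z}{\partial x} = \frac{\partial f}{\partial x} = f_x = \partial_x f = D_x f
$$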

Accelerating Impact through Community — with Chrys Wu

This week's guest is global tech community builder Chrys Wu, who details how you too can leverage communities to accelerate your career. This is the first SuperDataScience episode ever recorded in person!

In addition to accelerating your career with community, Chrys covers:

• K-pop music and its associated cultural movement

• How the Write/Speak/Code and Hacks/Hackers organizations she co-founded leverage community to make a massive global impact for marginalized genders and journalism, respectively

• Her top resources — social media accounts, blogs, and podcasts — for staying abreast of the latest in data science and machine learning

Chrys is a consultant who specializes in product development and change management. She's also a co-founder of both Write/Speak/Code and Hacks/Hackers, the latter of which has grown to 70 chapters across five continents.

Listen or watch here.

"Math for Machine Learning" course on Udemy complete!

After a year of filming and editing, my "Math for Machine Learning" course on Udemy is complete! To celebrate, we put together this epic video that overviews the 15-hour curriculum in under two minutes.

Over 83,000 students have registered for the course, which provides an introduction to all of the essential Linear Algebra and Calculus that one needs to be an expert Machine Learning practitioner. It's full of hundreds of hands-on code demos in the key Python tensor libraries — NumPy, TensorFlow, and PyTorch — to make learning fun and intuitive.

You can check out the course here.

Advanced Partial-Derivative Exercises

My "Machine Learning Foundations" video this week features fun geometrical examples in order to strengthen your command of the partial derivative theory that we covered in the preceding videos.

Transformers for Natural Language Processing

This week's guest is award-winning author Denis Rothman. He details how Transformer models (like GPT-3) have revolutionized Natural Language Processing (NLP) in recent years. He also explains Explainable AI (XAI).

Denis:

• Is the author of three technical books on artificial intelligence

• Won this year's Data Community Content Creator Award for technical book author with his most recent book, "Transformers for NLP"

• Spent 25 years as co-founder of French A.I. company Planilog

• Has been patenting A.I. algos such as those for chatbots since 1982

In this episode, Denis fills us in on:

• What Natural Language Processing is

• What Transformer architectures are (e.g., BERT, GPT-3)

• Tools we can use to explain *why* A.I. algorithms provide a particular output

We covered audience questions from Serg, Chiara, and Jean-charles during filming. For those we didn't get to ask, Denis is kindly answering via a LinkedIn post today!

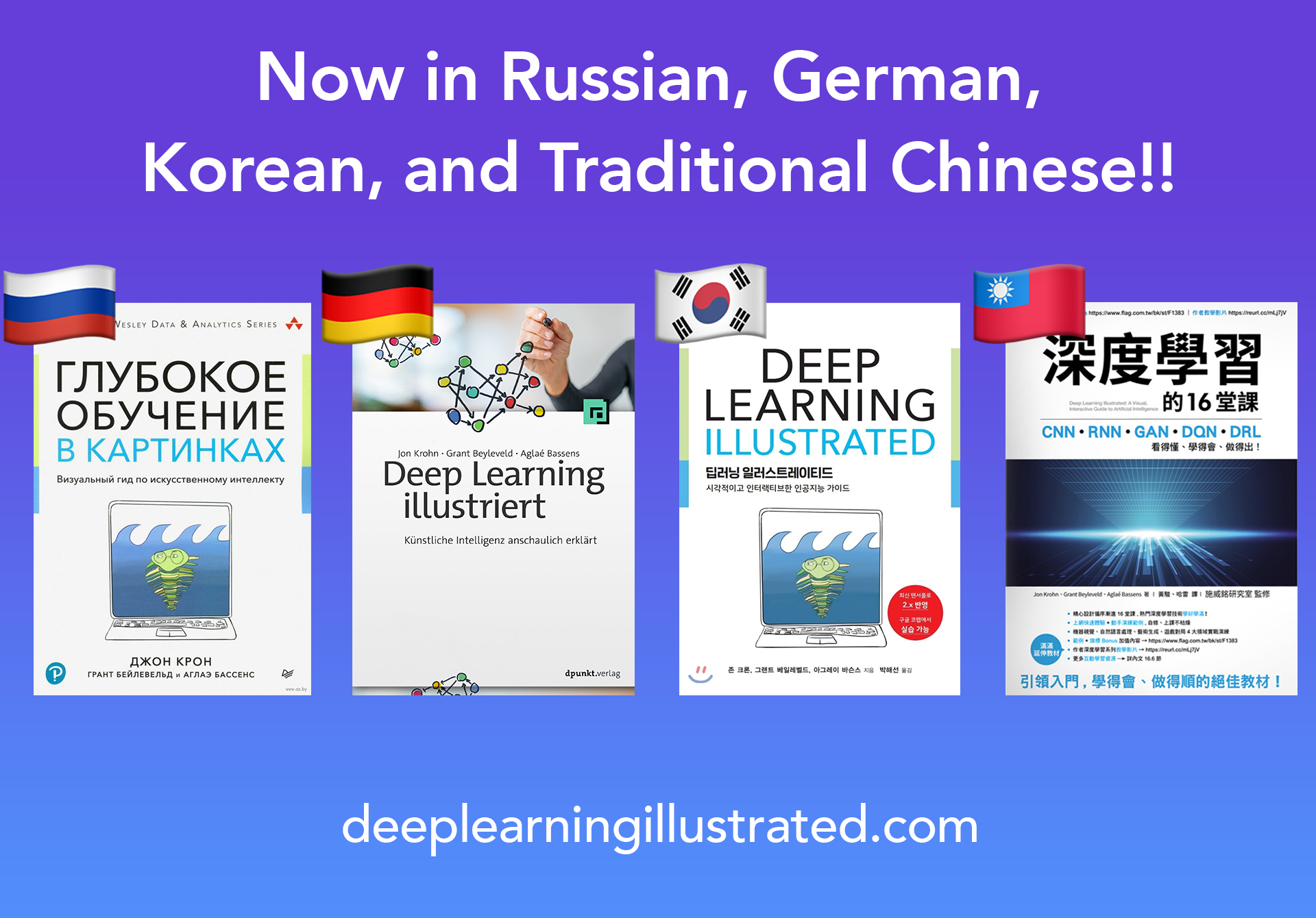

Translations of Deep Learning Illustrated – Now available in Traditional Chinese

My book, Deep Learning Illustrated, recently became available in Traditional Chinese alongside the existing Russian, German, and Korean translations. The new edition instantly became a #1-bestseller in Taiwan.

Thanks to Neville Huang for diligently translating to Traditional Chinese from the original English. Neville also tipped me off to the #1-bestseller status — I've put the screenshot he shared with me on LinkedIn.

Upcoming guest on the SuperDataScience Podcast: Wes McKinney

Next week, I'm interviewing the monumental Wes McKinney — creator of pandas, co-creator of Apache Arrow, and bestselling author of "Python for Data Analysis" — for a SuperDataScience episode.

Got Qs for him? Tweet them @jonkrohnlearns or send them to me on LinkedIn.

Advanced Partial Derivatives

This week's video builds on the preceding ones in my ML Foundations series to advance our understanding of partial derivatives by working through geometric examples. We use paper and pencil as well as Python code.
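
Here is a minimal pure-Python sketch of a geometric partial-derivative example. It assumes a cylinder with volume v = πr²l; the cylinder is a hypothetical choice for illustration and not necessarily the shape used in the video. Each partial derivative holds the other dimension fixed. Multiplying a partial derivative by a small nudge approximates the resulting change in volume.

```python
import math


def cylinder_volume(r, l):
    """Volume of a cylinder with radius r and length l."""
    return math.pi * r**2 * l


def dv_dr(r, l):
    """Partial derivative of volume w.r.t. radius, holding length fixed."""
    return 2 * math.pi * r * l


def dv_dl(r, l):
    """Partial derivative of volume w.r.t. length, holding radius fixed.

    Geometrically, this is just the circular cross-sectional area.
    """
    return math.pi * r**2


r, l, delta = 3.0, 5.0, 1e-4
actual_change = cylinder_volume(r + delta, l) - cylinder_volume(r, l)
linear_estimate = dv_dr(r, l) * delta   # first-order (tangent-line) approximation
print(dv_dr(r, l), dv_dl(r, l), actual_change, linear_estimate)
```

The gap between the actual change and the linear estimate works out to π·l·Δr². That gap shrinks much faster than Δr itself, which is why the partial derivative is a good local guide.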

Data Science for Private Investing — LIVE with Drew Conway

This week's guest is prominent data scientist and author Dr. Drew Conway. Working at Two Sigma, one of the world's largest hedge funds, Drew leads data science for private markets (e.g., real estate, private equity).

If you aren't familiar with Drew already, he:

• Serves as Senior Vice President for data science at Two Sigma

• Co-authored the classic O'Reilly Media book "ML for Hackers"

• Was co-founder and CEO of Alluvium, which was acquired in 2019

• Advised countless successful data-focused startups (e.g., Yhat, Reonomy)

• Obtained a PhD in politics from New York University

In this episode, he covers:

• What private investing is

• How data science can lead to better private investment decisions

• The differences between creating and executing models for public markets (such as stock exchanges) relative to private markets

• What he looks for in the data scientists he hires and how he interviews them

This is a special SuperDataScience episode because it's the first one recorded live in front of an audience (at the New York R Conference in September). Eloquent Drew was the willing guinea pig for this experiment, which was a great success: We filmed in a single unbroken take and fielded excellent audience questions.

Listen or watch here.

Deep Reinforcement Learning

Five-Minute Friday today is an intro to (deep) reinforcement learning, which has diverse cutting-edge applications: E.g., machines defeating humans at complex strategic games and robotic hands solving Rubik’s cubes.

You can watch or listen here.

O'Reilly + JK October & November ML Foundations LIVE Classes open for registration

We're halfway through my live 14-class "ML Foundations" curriculum, which I'm offering via O'Reilly Media. The first Probability class is on Wednesday, and five of the seven remaining classes are open for registration now:

• Oct 6 — Intro to Probability

• Oct 13 — Probability II and Information Theory

• Oct 27 — Intro to Statistics

• Nov 3 — Statistics II: Regression and Bayesian

• Nov 17 — Intro to Data Structures and Algorithms

The final two classes will be in December and are on Computer Science topics: Hashing, Trees, Graphs, and Optimization. Registration for them should open soon.

All of the training dates and registration links are provided at jonkrohn.com/talks.

A detailed curriculum and all of the code for my ML Foundations series is available open-source in GitHub here.