Two families of Deep Learning architectures have yielded the lion’s share of recent years' “artificial intelligence” advances. Click through for this blog post, which introduces both families and summarises my newly-released interactive video tutorials on them.

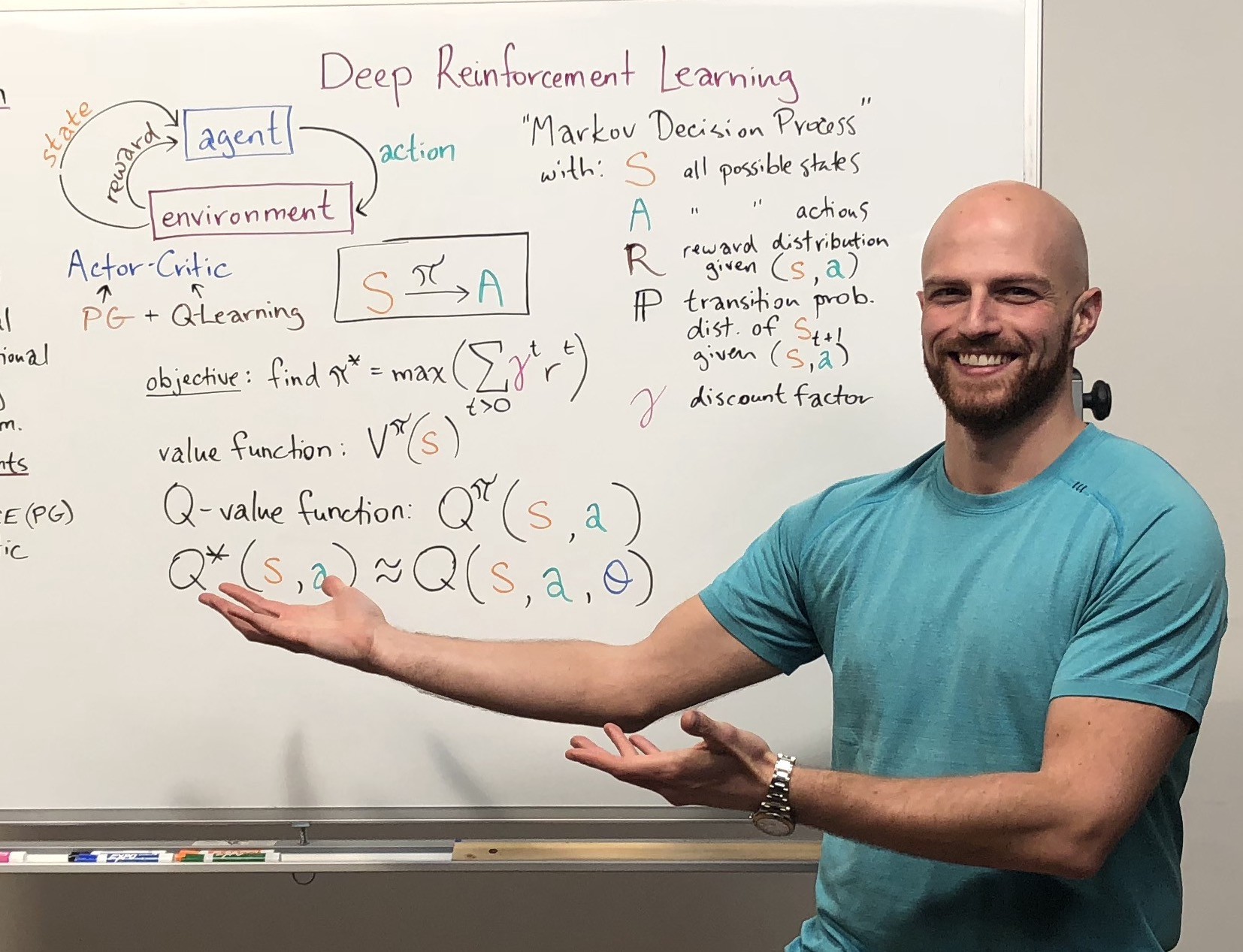

Filming “Deep Reinforcement Learning and Generative Adversarial Network LiveLessons” →

Following on the back of my Deep Learning with TensorFlow and Deep Learning for Natural Language Processing tutorials, I recently recorded a new set of videos covering Deep Reinforcement Learning and Generative Adversarial Networks. These two subfields of deep learning are arguably the most rapidly evolving, so it was a thrill both to learn about these topics and to put the videos together.

“OpenAI Lab” for Deep Reinforcement Learning Experimentation →

With SantaCon’s blizzard-y carnage erupting around untapt’s Manhattan office, the members of the Deep Learning Study Group trekked through snow and crowds of eggnog-saturated merry-makers to continue our Deep Reinforcement Learning journey.

We were fortunate to have this session led by Laura Graesser and Wah Loon Keng. Laura and Keng are the human brains behind the OpenAI Lab Python library for automating experiments that involve Deep RL agents exploring virtual environments. Click through to read more.

"Deep Learning with TensorFlow" is a 2017 Community Favourite

My Deep Learning with TensorFlow tutorial made my publisher's list of their highest-rated content in 2017. To celebrate, the code "FAVE" gets you 60% off the videos until January 8th.

Deep Reinforcement Learning: Our Prescribed Study Path →

At the fifteenth session of our Deep Learning Study Group, we began coverage of Reinforcement Learning. This post introduces Deep Reinforcement Learning and discusses the resources we found most useful for starting from scratch in this space.

"Deep Learning for Natural Language Processing" Video Tutorials with Jupyter Notebooks →

Following on the heels of my acclaimed hands-on introduction to Deep Learning, my Natural Language Processing-specific video lessons are now available in Safari. The accompanying Jupyter notebooks are available for free in GitHub.

Build Your Own Deep Learning Project via my 30-Hour Course →

My 30-hour Deep Learning course kicks off next Saturday, October 14th at the NYC Data Science Academy. Click through for details of the curriculum, a video interview, and specifics on how I'll lead students through the process of creating their own Deep Learning project, from conception all the way through to completion.

Deep Learning Course Demo

In February, I gave the above talk -- one of my favourites, on the Fundamentals of Deep Learning -- to the NYC Open Data Meetup. Tonight, following months of curriculum development, I'm providing an overview of the part-time Deep Learning course that I'm offering at the Meetup's affiliate, the NYC Data Science Academy, from October through December. The event is sold out, but a live stream will be available from 7pm Eastern Time (link through here).

“Deep Learning with TensorFlow” Introductory Tutorials with Jupyter Notebooks →

My hands-on introduction to Deep Learning is now available in Safari. The accompanying Jupyter notebooks are available for free in GitHub. Below is a free excerpt from the full series of lessons:

How to Understand How LSTMs Work →

In the twelfth iteration of our Deep Learning Study Group, we talked about a number of Deep Learning techniques pertaining to Natural Language Processing, including Attention, Convolutional Neural Networks, Tree Recursive Neural Networks, and Gated Recurrent Units. Our collective effort was primarily focused on a popular relative of the latter -- Long Short-Term Memory units. This blog post provides a high-level overview of what LSTMs are, why they're suddenly ubiquitous, and the resources we recommend working through to understand them.

Filming "Deep Learning with TensorFlow LiveLessons" for Safari Books →

We recently wrapped up filming my five-hour Deep Learning with TensorFlow LiveLessons. These hands-on, introductory tutorials are published by Pearson and will become available in the Safari Books online environment in August.

HackFemme 2017 →

On Friday, I had the humbling experience of contributing to a panel on becoming a champion of diversity at the Coalition for Queens' inaugural (and immaculately-run) HackFemme conference for women in software engineering. The consensus among the panelists (Sarah Manning, Etsy; Patricia Goodwin-Peters, Kate Spade) during the articulately-moderated hour (thanks to Valerie Biberaj) was that much can be accomplished by focusing on the present moment and, for example, speaking up for the softer-voiced contributors to a business discussion.

Deep Learning Study Group XI: Recurrent Neural Networks, including GRUs and LSTMs →

Last week, we welcomed Claudia Perlich and Brian Dalessandro as outstanding speakers at our study session, which focused on deep neural net approaches for handling natural language tasks like sentiment analysis or translation between languages.

Deep Learning Study Group 10: word2vec Mania + Generative Adversarial Networks →

In our tenth session, we delved into the theory and practice of converting human language into fodder for machines. Specifically, we discussed the mathematics and software application of word2vec, a popular approach for converting natural language into a high-dimensional vector space.
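
To make the idea of a word vector space a little more concrete, here is a minimal sketch in plain Python. It does not train word2vec: the three-dimensional vectors in `toy_vectors` are hypothetical and hand-picked purely for illustration (real embeddings are learned from a corpus and typically have hundreds of dimensions), and the helper names `cosine_similarity` and `nearest` are my own. It only shows how, once words are vectors, similarity and analogy-style arithmetic reduce to simple geometry.

```python
import math

# Hypothetical, hand-picked vectors for illustration only.
# Real word2vec embeddings are learned from text, not written by hand.
toy_vectors = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man": [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(vector, vectors):
    """Return the word whose vector has the highest cosine similarity."""
    return max(vectors, key=lambda word: cosine_similarity(vector, vectors[word]))


# Analogy-style arithmetic: king - man + woman, computed element-wise.
analogy = [
    k - m + w
    for k, m, w in zip(toy_vectors["king"], toy_vectors["man"], toy_vectors["woman"])
]

print(nearest(analogy, toy_vectors))
```

With these toy values, the vector closest to `king - man + woman` is the one for "queen", which is the kind of relationship that learned embeddings are known for capturing at much larger scale.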

Deep Learning Study Group IX: Natural Language Processing, AI in Fashion, and U-Net

In our most recent session, we began learning about word vectors and vector-space embeddings. This is a stepping stone en route to applying Deep Learning techniques to process natural language.

Deep Learning Study Group VIII: Unsupervised Learning, Regularisation, and Venture Capital →

At the eighth iteration of our Deep Learning Study Group, we discussed unsupervised learning techniques and enjoyed presentations from Raffi Sapire and Katya Vasilaky on venture capital investment for machine-learning start-ups and L2 regularisation, respectively.

Fundamental Deep Learning code in TFLearn, Keras, Theano and TensorFlow →

I had a ball giving this talk on Deep Learning hosted by the Open Statistical Programming Meetup at eBay New York last week. It was a packed house, with many thoughtful questions and fruitful discussions. This post summarises the content, and includes the full video, the slides, Jupyter notebooks, and an introduction to the code snippets I presented.

Introductory Talk on Deep Learning at Wilfrid Laurier University →

Last week, I gave an introductory talk on Deep Learning, an Artificial Intelligence approach, to the Faculty of Science at Wilfrid Laurier University. It covered what Deep Learning is, how it's impacting each of us today, and how it is poised to become ever-more ubiquitous in the years to come. Here is a summary write-up.

Upcoming Talk: Deep Learning with Artificial Neural Networks →

Next week, I'll be giving an introductory, public lecture on the topics I'm most passionate about -- Deep Learning and AI -- at Wilfrid Laurier University. If you're in Waterloo, Ontario, I'd love to share it with you live -- on January 4th at 4pm. Click through the title for details.

Deep Learning Study Group #6: A History of Machine Vision →

A fortnight ago, our Deep Learning Study Group held a particularly fun and engaging session.

This was our first meeting since completing Michael Nielsen’s Neural Networks and Deep Learning text. Nielsen’s work provided us with a solid foundation for exploring more thoroughly the convolutional neural nets that are the de facto standard in contemporary machine-vision applications.