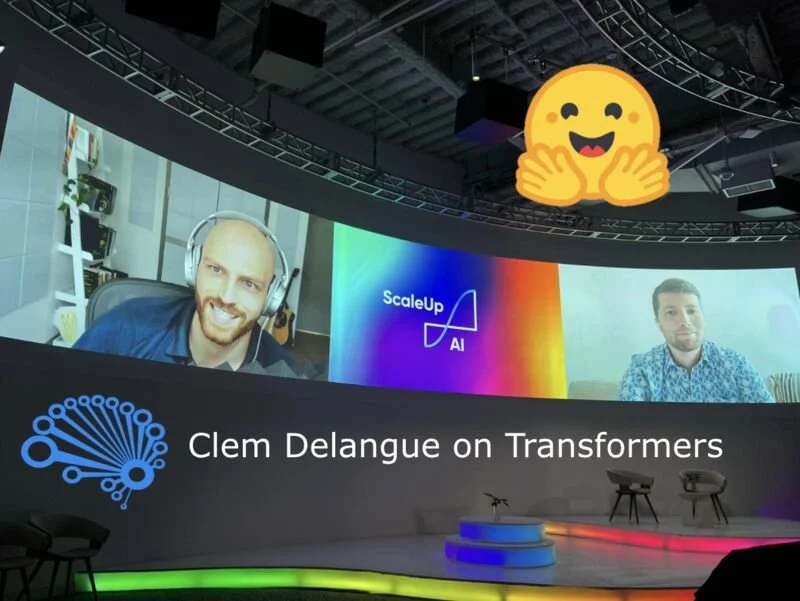

In today's SuperDataScience episode, Hugging Face CEO Clem Delangue fills us in on how open-source transformer architectures are accelerating ML capabilities. Recorded for yesterday's ScaleUp:AI conference in NY.

The SuperDataScience show's available on all major podcasting platforms, YouTube, and at SuperDataScience.com.


How to Rock at Data Science — with Tina Huang

Can you tell I had fun filming this episode with Tina Huang, YouTube data science superstar (293k subscribers)? In it, we laugh while discussing how to get started in data science and her learning/productivity tricks.

Tina:

• Creates YouTube videos with millions of views on data science careers, learning to code, SQL, productivity, and study techniques.

• Is a data scientist at one of the world's largest tech companies (she keeps the firm anonymous so she can publish more freely).

• Previously worked at Goldman Sachs and the Ontario Institute for Cancer Research.

• Holds a Master's in Computer and Information Technology from the University of Pennsylvania and a bachelor's in Pharmacology from the University of Toronto.

In this episode, Tina details:

• Her guidance for preparing for a career in data science from scratch.

• Her five steps for consistently doing anything.

• Her strategies for learning effectively and efficiently.

• What the day-to-day is like for a data scientist at one of the world’s largest tech companies.

• The software languages she uses regularly.

• Her SQL course.

• How her science and computer science backgrounds help her as a data scientist today.

Today’s episode should be appealing to a broad audience, whether you’re thinking of getting started in data science, are already an experienced data scientist, or you’re more generally keen to pick up career and productivity tips from a light-hearted conversation.

Thanks to Serg Masís, Brindha Ganesan and Ken Jee for providing questions for Tina... in Ken's case, a very silly question indeed.


Daily Habit #8: Math or Computer Science Exercise

This article was originally adapted from a podcast, which you can check out here.

At the beginning of the new year, in Episode #538, I introduced the practice of habit tracking and provided you with a template habit-tracking spreadsheet. Then, we had a series of Five-Minute Fridays that revolved around daily habits I espouse, and that theme continues today. The habits we covered in January and February were related to my morning routine.

Starting last week, we began coverage of habits on intellectual stimulation and productivity. Specifically, last week’s habit was “reading two pages”. This week, we’re moving onward with doing a daily technical exercise; in my case, this is either a mathematics, computer science, or programming exercise.

The reason I have this daily-technical-exercise habit is that data science is both a limitlessly broad field and an ever-evolving one. If we keep learning on a regular basis, we can expand our capabilities and open doors to new professional opportunities. This is one of the driving ideas behind the #66daysofdata hashtag, which (if you haven’t heard of it before) is detailed in episode #555 with Ken Jee, who originated the now-ubiquitous hashtag.

Engineering Data APIs

How you design a data API from scratch and how a data API can leverage machine learning to improve the quality of healthcare delivery are topics covered by Ribbon Health CTO Nate Fox in this week's episode.

Ribbon Health is a New York-based API platform for healthcare data that has raised $55m, including from some of the biggest names in venture capital like Andreessen Horowitz and General Catalyst.

Prior to Ribbon, Nate:

• Worked as an Analytics Engineer at the marketing start-up Unified.

• Was a Product Marketing Manager at Microsoft.

• Obtained a mechanical engineering degree from the Massachusetts Institute of Technology and an MBA from Harvard Business School.

In this episode, Nate details:

• What APIs ("application programming interfaces") are.

• How you design a data API from scratch.

• How Ribbon Health’s data API leverages machine learning models to improve the quality of healthcare delivery.

• How to ensure the uptime and reliability of APIs.

• How scientists and engineers can make a big social impact in health technology.

• His favorite tool for easily scaling up the impact of a data science model to any number of users.

• What he looks for in the data scientists he hires.

Today’s episode has some technical data science and software engineering elements here and there, but much of the conversation should be interesting to anyone who’s keen to understand how data science can play a big part in improving healthcare.


Daily Habit #7: Read Two Pages

At the beginning of the new year, in Episode #538, I introduced the practice of habit tracking and provided you with a template habit-tracking spreadsheet. Then, we had a series of Five-Minute Fridays that revolved around daily habits I espouse and that theme continues today. The habits we covered in January and February were my morning habits, specifically:

• Starting the day with a glass of water

• Making my bed

• Carrying out alternate-nostril breathing

• Meditating

• Writing morning pages

Now, we’ll continue on with habits that extend beyond just my morning with a block of habits on intellectual stimulation and productivity. Specifically, today’s habit is “reading two pages”.

GPT-3 for Natural Language Processing

With its human-level capacity on tasks as diverse as question-answering, translation, and arithmetic, GPT-3 is a game-changer for A.I. This week's brilliant guest, Melanie Subbiah, was a lead author of the GPT-3 paper.

GPT-3 is a natural language processing (NLP) model with 175 billion parameters that has demonstrated unprecedented and remarkable "few-shot learning" on the diverse tasks mentioned above (translation between languages, question-answering, performing three-digit arithmetic) as well as on many more (discussed in the episode).
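To make "few-shot learning" concrete, here is a minimal sketch of how a few-shot prompt for three-digit arithmetic might be assembled: a handful of solved examples followed by one unsolved query, all passed to the model as plain text. The `build_few_shot_prompt` helper and the `Q:`/`A:` layout are illustrative assumptions, not the exact format used in the GPT-3 paper, and no model is called here.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Format solved (question, answer) pairs followed by an unsolved query.

    The Q:/A: layout is a hypothetical convention chosen for illustration.
    """
    blocks = [f"Q: {question}\nA: {answer}" for question, answer in examples]
    # The final block leaves the answer empty for the model to complete.
    blocks.append(f"Q: {query}\nA:")
    return "\n\n".join(blocks)


# Two solved demonstrations ("shots") and one new problem.
examples = [
    ("What is 123 + 456?", "579"),
    ("What is 250 + 317?", "567"),
]
prompt = build_few_shot_prompt(examples, "What is 412 + 389?")
print(prompt)
```

The point of the sketch is that the model's weights are never updated: the "learning" comes entirely from the demonstrations included in the prompt.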

Melanie's paper sent shockwaves through the mainstream media and was recognized with an Outstanding Paper Award from NeurIPS (the most prestigious machine learning conference) in 2020.

Melanie:

• Developed GPT-3 while she worked as an A.I. engineer at OpenAI, one of the world’s leading A.I. research outfits.

• Previously worked as an A.I. engineer at Apple.

• Is now pursuing a PhD at Columbia University in the City of New York specializing in NLP.

• Holds a bachelor's in computer science from Williams College.

In this episode, Melanie details:

• What GPT-3 is.

• Why applications of GPT-3 have transformed not only the field of data science but also the broader world.

• The strengths and weaknesses of GPT-3, and how these weaknesses might be addressed with future research.

• Whether transformer-based deep learning models spell doom for creative writers.

• How to address the climate change and bias issues that cloud discussions of large natural language models.

• The machine learning tools she’s most excited about.

This episode does have technical elements that will appeal primarily to practicing data scientists, but Melanie and I made an effort to explain concepts and provide context wherever we could, so hopefully much of this fun, laugh-filled episode will be engaging and informative to anyone who’s keen to learn about the state of the art in natural language processing and A.I.


Jon’s Answers to Questions on Machine Learning

The wonderful folks at the Open Data Science Conference (ODSC) recently asked me five great questions on machine learning. I thought you might like to hear the answers too, so here you are!

Their questions were:

1. Why does my educational content focus on deep learning and on the foundational subjects underlying machine learning?

2. Would you consider deep learning to be an “advanced” data science skill, or is it approachable to newcomers/novice data scientists?

3. What open-source deep learning software is most dominant today?

4. What open-source deep learning software are you looking forward to using more?

5. Do you have a case study where you've used deep learning in practice?


ODSC's blog post of our Q&A is here.

SuperDataScience Podcast LIVE at MLconf NYC and ScaleUp:AI!

It's finally happening: the first-ever SuperDataScience episodes filmed with a live audience! On March 31 and April 7 in New York, you'll be able to react to guests and ask them questions in real-time. I'm excited 🕺

The first live, in-person episode will be filmed at MLconf NYC on March 31st. The guest will be Alexander Holden Miller, an engineering manager at Facebook A.I. Research who leads bleeding-edge work at mind-blowing intersections of deep reinforcement learning, natural language processing, and creative A.I.

A week later on April 7th, another live, in-person episode will be filmed at ScaleUp:AI. I'll be hosting a panel on open-source machine learning that features Hugging Face CEO Clem Delangue.

I hope to see you at one of these conferences, the first I'll be attending in over two years! Can't wait. There are more live SuperDataScience episodes planned for New York this year and hopefully it won't be long before we're recording episodes live around the world.

My Favorite Calculus Resources

It's my birthday today! In celebration, I'm delighted to be releasing the final video of my "Calculus for Machine Learning" YouTube course. The first video came out in May and now, ten months later, we're done! 🎂

We published a new video from my "Calculus for Machine Learning" course to YouTube every Wednesday since May 6th, 2021. So happy that it's now complete for you to enjoy. Playlist is here.

More detail about my broader "ML Foundations" curriculum (which also covers subject areas like Linear Algebra, Probability, Statistics, and Computer Science) and all of the associated open-source code is available on GitHub here.

Starting next Wednesday, we'll begin releasing videos for a new YouTube course of mine: "Probability for Machine Learning". Hope you're excited to get going on it :)

Effective Pandas

Seven-time bestselling author Matt Harrison reveals his top tips and tricks to enable you to get the most out of Pandas, the leading Python data analysis library. Enjoy!

Matt's books, all of which have been Amazon best-sellers, are:

1. Effective Pandas

2. Illustrated Guide to Learning Python 3

3. Intermediate Python

4. Learning the Pandas Library

5. Effective PyCharm

6. Machine Learning Pocket Reference

7. Pandas Cookbook (now in its second edition)

Beyond being a prolific author, Matt:

• Teaches "Exploratory Data Analysis with Python" at Stanford.

• Has taught Python at big organizations like Netflix and NASA.

• Has worked as a CTO and Senior Software Engineer.

• Holds a degree in Computer Science from Stanford University.

On top of Matt's tips for effective Pandas programming, we cover:

• How to squeeze more data into Pandas on a given machine.

• His recommended software libraries for working with tabular data once you have too many data to fit on a single machine.

• How having a computer science education and having worked as a software engineer has been helpful in his data science career.

This episode will appeal primarily to practicing data scientists who are keen to learn about Pandas or keen to become an even deeper expert on Pandas by learning from a world-leading educator on the library.


Sports Analytics and 66 Days of Data with Ken Jee

Ken Jee — sports analytics leader, originator of the ubiquitous #66daysofdata hashtag, and data-science YouTube superstar (190k subscribers) — is the guest for this week's fun and candid episode ⛳️🏌️

In addition to his YouTube content creation, Ken:

• Is Head of Data Science at Scouts Consulting Group LLC.

• Hosts the "Ken's Nearest Neighbors" podcast.

• Is Adjunct Professor at DePaul University.

• Holds a Master's in Computer Science with an AI/ML concentration.

• Is renowned for starting #66daysofdata, which has helped countless people create the habit of learning and working on data science projects every day.

Today’s episode should be broadly appealing, whether you’re already an expert data scientist or just getting started.

In this episode, Ken details:

• What sports analytics is and specific examples of how he’s made an impact on the performance of athletes and teams with it.

• Where the big opportunities lie in sports analytics in the coming years.

• His four-step process for how someone should get started in data science today.

• His favorite tools for software scripting as well as for production code development.

• How the #66daysofdata can supercharge your capacity as a data scientist whether you’re just getting started or are already an established practitioner.

Thanks to Christina, 🦾 Ben, Serg, Arafath, and Luke for great questions for Ken!


Jon’s Deep Learning Courses

This article was originally adapted from a podcast, which you can check out here.

Sometimes, during guest interviews, I mention the existence of my deep learning book or my mathematical foundations of machine learning course.

It recently occurred to me, however, that I’ve never taken a step back to detail exactly what content I’ve published over the years and where it’s available if you’re interested in it. So, today I’m dedicating a Five-Minute Friday specifically to detailing what all of my deep learning content is and where you can get it. In next week’s episode, I’ll dig into my math for machine learning content. But, yes, for today, it’s all about deep learning.

The Statistics and Machine Learning Quests of Dr. Josh Starmer

Holy crap, it's here! Joshua Starmer, the creative genius behind the StatQuest YouTube channel (over 675k subscribers!) joins me for an epic episode on stats, ML, and his learning and communication secrets.

Dr. Starmer:

• Provides uniquely clear statistics and ML education via his StatQuest YouTube channel.

• Is Lead A.I. Educator at Grid.ai, a company founded by the creators of PyTorch Lightning that enables you to take an ML model you have on your laptop and train it seamlessly on the cloud.

• Was a researcher at the University of North Carolina at Chapel Hill for 13 years, first as a postdoc and then as an assistant professor, applying statistics to genetic data.

• Holds a PhD in Biomathematics and Computational Biology.

• Holds two bachelor's degrees, one in Computer Science and another in Music.

In this episode filled with silliness and laughs from start to finish, Josh fills us in on:

• His learning and communication secrets.

• The single tool he uses to create YouTube videos with over a million views.

• The software languages he uses daily as a data scientist.

• His forthcoming book, "The StatQuest Illustrated Guide to Machine Learning".

• Why he left his academic career.

• A question you might want to ask yourself to check in on whether you’re following the right life path yourself.

Today’s epic episode is largely high level and so will appeal to anyone who likes to giggle while hearing from one of the most intelligent and creative minds in education on data science, machine learning, music, genetics, and the intersection of all of the above.


Thanks to Serg, Nikolay, Phil, Jonas, and Suddhasatwa for great audience questions!

The Most Popular SuperDataScience Episodes of 2021

This article was originally adapted from a podcast, which you can check out here.

2021 was my first year hosting the SuperDataScience podcast and, boy, did I ever have a blast. Filming and producing episodes for you has become the highlight of my week. So, thanks for listening — this show wouldn’t exist without you and I hope I can continue to deliver episodes you love for years and years to come.

Speaking of episodes you love, it’s now been more than 30 days since the final episode of 2021 aired. Internally at the SuperDataScience podcast, we use the 30-day mark after an episode’s been released as our quantitative Key Performance Indicator for how an episode’s been received by you. Episodes accrue tons more listens after the 30-day mark, but we can use that time point after each episode to effectively compare relative episode popularity.

So, you might have your own personal favorites from 2021 but let’s examine the data and see which — quantitatively speaking — were the top-performing episodes of the year.
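As a rough illustration of that 30-day comparison, the sketch below sums each episode's listens from its release date up to (but not including) day 30 and then ranks episodes by that total. The episode names, dates, and listen counts are made up for the example, and the exact window boundaries are an assumption; the show's actual analytics pipeline isn't described here.

```python
from datetime import date, timedelta
from typing import Dict, List, Tuple

# (day, listens on that day) records for one episode
DailyListens = List[Tuple[date, int]]


def listens_in_first_30_days(release: date, daily: DailyListens) -> int:
    """Sum listens on days in the half-open window [release, release + 30 days)."""
    cutoff = release + timedelta(days=30)
    return sum(count for day, count in daily if release <= day < cutoff)


# Hypothetical data: an early-year and a late-year episode.
episodes: Dict[str, Tuple[date, DailyListens]] = {
    "Episode A": (
        date(2021, 1, 5),
        [(date(2021, 1, 5), 900), (date(2021, 1, 20), 300), (date(2021, 3, 1), 500)],
    ),
    "Episode B": (
        date(2021, 12, 28),
        [(date(2021, 12, 28), 1000), (date(2022, 1, 15), 400)],
    ),
}

kpi = {
    name: listens_in_first_30_days(release, daily)
    for name, (release, daily) in episodes.items()
}
ranking = sorted(kpi, key=kpi.get, reverse=True)
print(kpi)
print(ranking)
```

Fixing the window at 30 days is what makes a January episode comparable with a December one: listens that arrive later (such as Episode A's March listens) are ignored for both.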

Deep Reinforcement Learning — with Wah Loon Keng

For an intro to Deep Reinforcement Learning or to hear about the latest research and applications in the field (which is responsible for the most cutting-edge "A.I."), today's episode with Wah Loon Keng is for you.

Keng:

• Co-authored the exceptional book "Foundations of Deep Reinforcement Learning" alongside Laura Graesser.

• Co-created SLM-Lab, an open-source deep reinforcement learning framework written with the Python PyTorch library.

• Is a Senior A.I. Engineer at AppLovin, a marketing solutions provider.

In this episode, Keng details:

• What reinforcement learning is.

• A timeline of major breakthroughs in the history of Reinforcement Learning, including when and how Deep RL evolved.

• Modern industrial applications of Deep RL across robotics, logistics, and climate change.

• Limitations of Deep RL and how future research may overcome these limitations.

• The industrial robotics and A.I. applications in which Deep RL could play a leading role in the coming decades.

• What it means to be an A.I. engineer and the software tools he uses daily in that role.


Daily Habit #6: Write Morning Pages

This article was originally adapted from a podcast, which you can check out here.

At the beginning of the new year, in Episode #538, I introduced the practice of habit tracking and provided you with a template habit-tracking spreadsheet. Since then, Five-Minute Fridays have largely revolved around daily habits and that theme continues today. Indeed, having covered most of my morning habits already, namely:

• Starting the day with a glass of water

• Making my bed

• Carrying out alternate-nostril breathing

• Meditating

We’ve now reached my final morning habit, which is to compose something called morning pages.

I learned about the concept of morning pages from Julia Cameron’s book The Artist’s Way. It may seem hard to believe now that I’m releasing two podcast episodes and a YouTube tutorial every single week, but five years ago I had staggeringly little creative capacity. I excelled at evaluating other people’s ideas and I could execute on ideas very well once they were passed to me, but I self-diagnosed that if I was going to flourish as a data scientist and entrepreneur, I’d need to hone my creativity.

Engineering Natural Language Models — with Lauren Zhu

Zero-shot multilingual neural machine translation, how to engineer natural language models, and why you should use PCA to choose your job are topics covered this week by the fun and brilliant Lauren Zhu.

Lauren:

• Is an ML Engineer at Glean, a Silicon Valley-based natural language understanding company that has raised $55m in venture capital.

• Worked, prior to Glean, as an ML Intern at both Apple and the autonomous vehicle subsidiary of Ford Motor Company; as a software engineering intern at Qualcomm; and as an A.I. Researcher at The University of Edinburgh.

• Holds BS and MS degrees in Computer Science from Stanford.

• Served as a teaching assistant for some of Stanford University’s most renowned ML courses such as "Decision Making Under Uncertainty" and "Natural Language Processing with Deep Learning".

In this episode, Lauren details:

• Where to access free lectures from Stanford courses online.

• Her research on Zero-Shot Multilingual Neural Machine Translation.

• Why you should use Principal Component Analysis to choose your job.

• The software tools she uses day-to-day at Glean to engineer natural language processing ML models into massive-scale production systems.

• Her surprisingly pleasant secret to both productivity and success.

There are parts of this episode that will appeal especially to practicing data scientists but much of the conversation will be of interest to anyone who enjoys a laugh-filled conversation on A.I., especially if you’re keen to understand the state-of-the-art in applying ML to natural language problems.


Daily Habit #5: Meditate

This article was originally adapted from a podcast, which you can check out here.

At the beginning of the new year, in Episode #538, I introduced the practice of habit tracking and provided you with a template habit-tracking spreadsheet. Since then, Five-Minute Fridays have largely revolved around daily habits and that theme continues today with my daily habit of meditation.

If you’ve been listening to SuperDataScience episodes for more than a year, you’ll be familiar with my meditation practice already, as I detailed it back in Episodes #434 and 436 — episodes on what I called “attention-sharpening tools”. You can refer back to those episodes to hear all the specifics, but the main idea is that every single day — for thousands of consecutive days now — I go through a guided meditation session using the popular Headspace application.

How Genes Influence Behavior — with Prof. Jonathan Flint

How do genes influence behavior? This week's guest, Prof. Jonathan Flint, fills us in, with a particular focus on how machine learning is uncovering connections between genetics and psychiatric disorders like depression.

In this episode, Prof. Flint details:

• How we know that genetics plays a role in complex human behaviors, including psychiatric disorders like anxiety, depression, and schizophrenia.

• How data science and ML play a prominent role in modern genetics research and how that role will only increase in years to come.

• The open-source software libraries that he uses for data modeling.

• What it's like day-to-day for a world-class medical sciences researcher.

• A single question you can ask to prevent someone committing suicide.

• How the future of psychiatric treatments is likely to be shaped by massive-scale genetic sequencing and everyday consumer technologies.

Jonathan:

• Is Professor-in-Residence at the University of California, Los Angeles, specializing in Neuroscience and Genetics.

• Leads a gigantic half-billion dollar project to sequence the genomes of hundreds of thousands of people around the world in order to better understand the genetics of depression.

• Trained originally as a psychiatrist and established himself as a pioneer in the genetics of behavior during a thirty-year stint as a medical sciences researcher at the University of Oxford.

• Has authored over 500 peer-reviewed journal articles and his papers have been cited an absurd 50,000 times.

• Wrote a university-level textbook called "How Genes Influence Behavior", which is now in its second edition.

Today’s episode mentions a few technical data science details here and there but the episode will largely be of interest to anyone who’s keen to understand how your genes influence your behavior, whether you happen to have a data science background or not.

Thanks to Mohamad, Hank, and Serg for excellent audience questions!


Daily Habit #4: Alternate-Nostril Breathing

This article was originally adapted from a podcast, which you can check out here.

Back in Episode #538, I kicked off the new year of Five-Minute Fridays by introducing the practice of habit-tracking, including providing you with a template habit-tracking spreadsheet. I followed that up in Episodes #540 and 544 by detailing for you my habits of starting the day with a glass of water and making my bed, respectively.

Continuing on with my morning habits, today’s episode is about alternate-nostril breathing (ANB).

ANB is often associated with yoga classes so if you do a lot of yoga, you may have encountered this technique before. However, there’s no reason why you can’t duck into a quick ANB session for a couple of minutes at any time. I like having it as one of my morning rituals because it makes me feel centered, focused, and present; as a result, I find myself both enjoying being alive and ready to tackle whatever’s going to come at me through the day. That said, if I’m feeling particularly stressed out or out of touch with the present moment, I might quickly squeeze in a few rounds of ANB at any time of day.
